The Sitemaps protocol allows a webmaster to inform search engines about URLs on a website that are available for crawling. A Sitemap is an XML file that lists the URLs for a site. It allows webmasters to include additional information about each URL: when it was last updated, how often it changes, and how important it is in relation to other URLs in the site. This allows search engines to crawl the site more intelligently. Sitemaps are a URL inclusion protocol and complement robots.txt, a URL exclusion protocol.
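To make this concrete, here is a minimal sketch in Python that writes a sitemap with the standard library's `xml.etree.ElementTree`. The `build_sitemap` function and the example URLs are hypothetical. The namespace `http://www.sitemaps.org/schemas/sitemap/0.9` is the one the protocol defines. Every entry gets a `<loc>`, and the optional fields are written only when they are present.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemaps protocol (version 0.9)
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML for a list of dicts (hypothetical helper).

    Each dict must have a "loc" key; "lastmod", "changefreq" and
    "priority" are optional and are written only when present.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        for tag in ("lastmod", "changefreq", "priority"):
            if tag in entry:
                ET.SubElement(url, tag).text = str(entry[tag])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    pages = [
        {"loc": "https://www.example.com/", "lastmod": "2024-01-15",
         "changefreq": "daily", "priority": 1.0},
        {"loc": "https://www.example.com/about/"},
    ]
    print(build_sitemap(pages))
```

ElementTree escapes characters such as `&` in URLs, so a link like `?a=1&b=2` is written as `?a=1&amp;b=2`. The protocol requires this escaping.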

A sitemap is one of the most effective ways to notify search engines about updates to your website. Search engine bots such as Googlebot crawl websites regularly and update their indexes with new information. However, how and how often they crawl a particular site is unpredictable. If you post new information on your website, you cannot be sure when the bots will index it. Generating a sitemap and submitting it to search engines such as Google helps them pick up the update sooner. It does not guarantee when, or whether, a page will be crawled and indexed.
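You can submit a sitemap directly to each search engine. The Sitemaps protocol also lets a site point crawlers to its sitemap with a `Sitemap:` line in robots.txt, which is the exclusion file that sitemaps complement. The sketch below uses a hypothetical robots.txt for `www.example.com`. It shows how Python's `urllib.robotparser` (Python 3.8+) reads that line back, the way a crawler would. The helper name `sitemaps_from_robots` is illustrative.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one exclusion rule plus the sitemap location
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""


def sitemaps_from_robots(text):
    """Return the Sitemap URLs declared in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    # site_maps() returns None when the file has no Sitemap line
    return parser.site_maps() or []


if __name__ == "__main__":
    print(sitemaps_from_robots(ROBOTS_TXT))
```

The `Sitemap:` directive does not depend on any `User-agent` group, so it can appear anywhere in the file.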

Most content management systems (such as Drupal, WordPress and Joomla) have plugins that generate a sitemap dynamically. All you have to do is install and configure such a plugin or add-on in your website's content management system.

Many online tools can generate a sitemap of your website for free. One such tool is XML-Sitemaps.com. Give it your website's URL and it will generate a sitemap listing the pages and links it finds on the site.
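Crawler-based generators work roughly like this:

1. Start at the home page.
2. Follow links that stay on the same site.
3. Record every page that loads.

The sketch below illustrates that idea only and is not how XML-Sitemaps.com is actually implemented. `fetch` is a hypothetical callback supplied by the caller; a dictionary lookup stands in for real HTTP requests. A real crawler would also need to honour robots.txt and limit its request rate.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href values of <a> tags in one HTML page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch):
    """Breadth-first crawl of one host; returns URLs of pages that loaded.

    `fetch(url)` is a hypothetical callback returning the page's HTML,
    or None when the page cannot be fetched (e.g. a broken link).
    """
    host = urlparse(start_url).netloc
    queued = {start_url}
    queue = deque([start_url])
    pages = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # skip pages that fail to load
            continue
        pages.append(url)
        collector = LinkCollector()
        collector.feed(html)
        collector.close()
        for href in collector.links:
            # Resolve relative links and drop "#fragment" parts
            absolute, _fragment = urldefrag(urljoin(url, href))
            # Stay on the same host and visit each URL only once
            if urlparse(absolute).netloc == host and absolute not in queued:
                queued.add(absolute)
                queue.append(absolute)
    return pages


if __name__ == "__main__":
    site = {
        "https://www.example.com/": '<a href="/about/">About</a> <a href="https://other.example.org/">Ext</a>',
        "https://www.example.com/about/": '<a href="/">Home</a> <a href="/contact/#form">Contact</a>',
        "https://www.example.com/contact/": "<p>Contact us</p>",
    }
    print(crawl("https://www.example.com/", site.get))
    # prints ['https://www.example.com/', 'https://www.example.com/about/', 'https://www.example.com/contact/']
```

A crawler can only find pages that are linked from somewhere, so orphan pages will be missing from its result. A CMS plugin typically reads the site's content directly and does not have this limitation.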

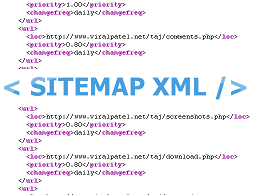

Structure of Sitemap XML

| Element | Required? | Description |
|---|---|---|
| `<urlset>` | Yes | The document-level element of the Sitemap. Everything after the `<?xml version>` declaration must be contained in this element. |
| `<url>` | Yes | Parent element for each URL entry. The remaining elements are children of this element. |
| `<loc>` | Yes | The full URL of the page, including the protocol (e.g. http, https) and a trailing slash, if required by the site's hosting server. This value must be less than 2,048 characters. |
| `<lastmod>` | No | The date the file was last modified, in ISO 8601 format. This can be the full date and time or, if desired, simply the date in the format YYYY-MM-DD. |
| `<changefreq>` | No | How frequently the page is likely to change. Valid values are `always`, `hourly`, `daily`, `weekly`, `monthly`, `yearly` and `never`. `always` denotes documents that change each time they are accessed. `never` denotes archived URLs (i.e. files that will not be changed again). This value is only a guide for crawlers and does not determine how frequently pages are indexed. |
| `<priority>` | No | The priority of the URL relative to other URLs on the site. It lets webmasters suggest to crawlers which pages they consider more important. The valid range is 0.0 to 1.0, with 1.0 being the most important. The default value is 0.5. Giving every page on a site a high priority does not affect search listings. The value only tells crawlers how important the site's pages are relative to one another. |
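The rules in this table can be checked mechanically. The sketch below assumes the sitemap is already available as a string. It parses the sitemap with `xml.etree.ElementTree` and reports problems with `<loc>`, `<lastmod>`, `<changefreq>` and `<priority>`. The name `validate_sitemap` is hypothetical. The date check is a simplified pattern for the W3C Datetime profile of ISO 8601 that sitemaps use, not a complete validator. The protocol's published XML schema remains the authoritative check.

```python
import re
import xml.etree.ElementTree as ET

# Prefix mapping used in the find/findtext paths below
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
CHANGEFREQ_VALUES = {"always", "hourly", "daily", "weekly", "monthly", "yearly", "never"}
# Simplified shape check: YYYY-MM-DD, optionally followed by a time and a
# time zone (e.g. 2024-01-15T10:30:00+00:00). Field ranges are not checked.
LASTMOD_RE = re.compile(
    r"\d{4}-\d{2}-\d{2}(T\d{2}:\d{2}(:\d{2}(\.\d+)?)?(Z|[+-]\d{2}:\d{2}))?"
)


def validate_sitemap(xml_text):
    """Return a list of problems; an empty list means none were found.

    Raises xml.etree.ElementTree.ParseError if the XML is malformed.
    """
    root = ET.fromstring(xml_text)
    if root.tag != "{%s}urlset" % NS["sm"]:
        return ["root element is not <urlset> in the sitemap namespace"]
    problems = []
    for i, url in enumerate(root.findall("sm:url", NS), start=1):
        loc = url.findtext("sm:loc", namespaces=NS)
        if not loc:
            problems.append(f"url {i}: missing or empty <loc>")
        elif len(loc.strip()) >= 2048:
            problems.append(f"url {i}: <loc> must be less than 2,048 characters")

        lastmod = url.findtext("sm:lastmod", namespaces=NS)
        if lastmod is not None and not LASTMOD_RE.fullmatch(lastmod.strip()):
            problems.append(f"url {i}: <lastmod> {lastmod!r} is not a valid date")

        changefreq = url.findtext("sm:changefreq", namespaces=NS)
        if changefreq is not None and changefreq.strip() not in CHANGEFREQ_VALUES:
            problems.append(f"url {i}: unknown <changefreq> {changefreq!r}")

        priority = url.findtext("sm:priority", namespaces=NS)
        if priority is not None:
            try:
                value = float(priority)
            except ValueError:
                value = None
            if value is None or not 0.0 <= value <= 1.0:
                problems.append(f"url {i}: <priority> {priority!r} is outside 0.0-1.0")
    return problems


if __name__ == "__main__":
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://www.example.com/</loc>"
        "<changefreq>sometimes</changefreq><priority>1.5</priority></url>"
        "</urlset>"
    )
    for problem in validate_sitemap(sample):
        print(problem)
```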
